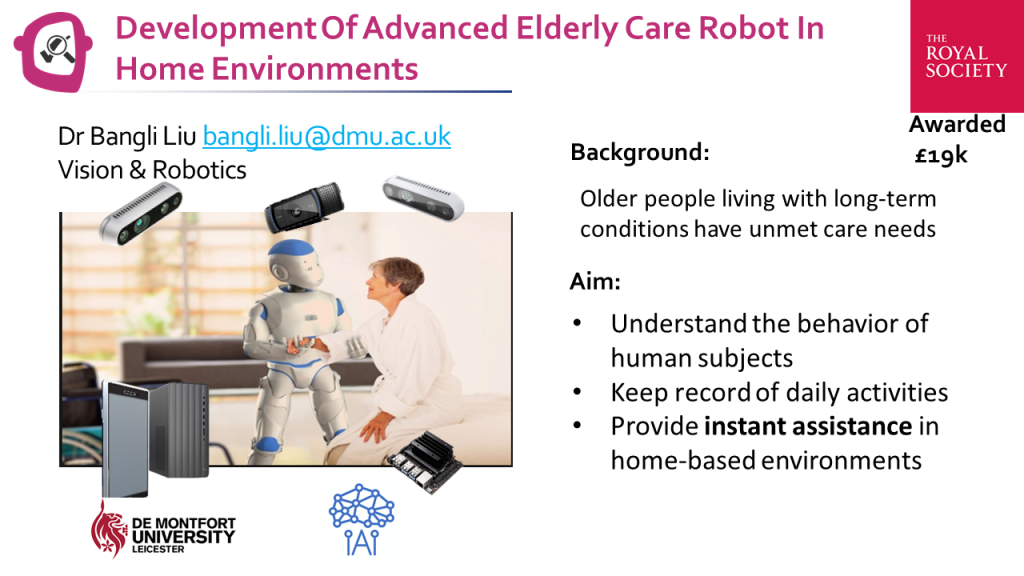

The UK is an ageing society. Currently, more than one-fifth of its population is over 60. The number of people aged 85 will double by 2041 and treble by 2066. Research shows that 82% of 85-year-olds suffer from at least one long-term condition. Robots have great potential to mitigate the upcoming challenges in elderly care. However, existing robots are still far from delivering satisfactory care services. One of the main reasons is their limited ability to understand human behaviour. In this proposal, we aim to address this challenge by developing advanced human action recognition algorithms that help the robot understand the intentions of human subjects and provide instant assistance in home-based environments. Despite active research and significant progress over the last few decades, human action recognition in home-based environments remains challenging due to occlusion, viewpoint and biometric variation, and varying execution rates. This project will develop a smart sensing platform consisting of a humanoid robot and several RGBD sensors mounted in different locations to cover the human activity areas. Advanced multi-sensor human action recognition algorithms will be developed to recognize human intention in various home-based environments. The system will perform action detection and recognition simultaneously and in real time, so that the robot can respond instantly.